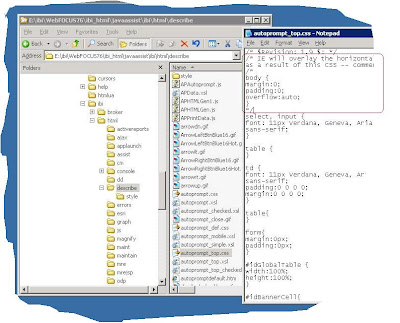

Welcome to the webpage for AutoPrompt, an automated prompt discovery algorithm to get language models to do what you want. We published the paper at the Conference on Empirical Methods in Natural Language Processing (EMNLP).

The remarkable success of pretrained language models has motivated the study of what kinds of knowledge these models learn during pretraining. Reformulating tasks as fill-in-the-blank problems (e.g., cloze tests) is a natural approach for gauging such knowledge; however, its usage is limited by the manual effort and guesswork required to write suitable prompts. To address this, we develop AutoPrompt, an automated method to create prompts for a diverse set of tasks, based on a gradient-guided search. Using AutoPrompt, we show that masked language models (MLMs) have an inherent capability to perform sentiment analysis and natural language inference without additional parameters or finetuning, sometimes achieving performance on par with recent state-of-the-art supervised models. We also show that our prompts elicit more accurate factual knowledge from MLMs than the manually created prompts on the LAMA benchmark, and that MLMs can be used as relation extractors more effectively than supervised relation extraction models. These results demonstrate that automatically generated prompts are a viable parameter-free alternative to existing probing methods and, as pretrained LMs become more sophisticated and capable, potentially a replacement for finetuning.

The name AutoPrompt is also used by a simple application for Mac that sits in your status bar and asks you, from time to time, what you are up to.

Note: This setting was implemented so that Managed Reporting prompting (IBIMRprompting) would be mutually exclusive with the amper autoprompt feature.

In the following discussion, we assume we have access to a pretrained generative language model $p_\theta$.
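To make the idea of a gradient-guided prompt search more concrete, here is a minimal sketch. It is not the AutoPrompt implementation: the "model" is a hypothetical toy (a logistic score over summed trigger-token embeddings), and every name (`make_toy_model`, `search_step`, `gradient_guided_search`) is an assumption made for illustration. The only part meant to mirror the technique is the loop itself: use the gradient of the label log-probability with respect to a trigger token's embedding to shortlist the top-k replacement tokens, then re-score each shortlisted token with the model and keep the best one.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def log_sigmoid(z):
    # Numerically stable log(sigmoid(z)).
    return -math.log1p(math.exp(-z)) if z >= 0 else z - math.log1p(math.exp(z))


def pooled_logit(trigger_ids, emb, w):
    # Toy stand-in for a language model: logit = w . (sum of trigger embeddings).
    return sum(dot(w, emb[t]) for t in trigger_ids)


def log_prob_label(trigger_ids, emb, w):
    # Toy stand-in for log p(label | prompt with these trigger tokens).
    return log_sigmoid(pooled_logit(trigger_ids, emb, w))


def grad_wrt_trigger_embedding(trigger_ids, emb, w):
    # d/de log sigmoid(z) = sigmoid(-z) * w; the same for every position in this toy.
    coeff = math.exp(log_sigmoid(-pooled_logit(trigger_ids, emb, w)))
    return [coeff * wi for wi in w]


def search_step(trigger_ids, position, emb, w, k):
    # First-order shortlist: rank vocabulary tokens by embedding . gradient.
    grad = grad_wrt_trigger_embedding(trigger_ids, emb, w)
    ranked = sorted(range(len(emb)), key=lambda v: dot(emb[v], grad), reverse=True)
    best_ids, best_lp = list(trigger_ids), log_prob_label(trigger_ids, emb, w)
    # Re-score each shortlisted token exactly and keep it only if it improves.
    for v in ranked[:k]:
        trial = list(trigger_ids)
        trial[position] = v
        lp = log_prob_label(trial, emb, w)
        if lp > best_lp:
            best_ids, best_lp = trial, lp
    return best_ids, best_lp


def gradient_guided_search(emb, w, init_ids, steps=6, k=5):
    ids = list(init_ids)
    lp = log_prob_label(ids, emb, w)
    for step in range(steps):
        # Cycle through trigger positions, updating one token per step.
        ids, lp = search_step(ids, step % len(ids), emb, w, k)
    return ids, lp


def make_toy_model(vocab_size=20, dim=4, seed=0):
    # Hypothetical random embeddings and classifier weights.
    rng = random.Random(seed)
    emb = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(vocab_size)]
    w = [rng.uniform(-1, 1) for _ in range(dim)]
    return emb, w
```

Calling `gradient_guided_search(*make_toy_model(), [0, 0, 0])` returns the selected trigger token ids and their log-probability. Because a replacement is accepted only when it improves the score, the log-probability never decreases across steps. With a real masked language model, the gradient would come from backpropagation rather than a closed-form expression.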
This cache contains values assigned to parameters used in Autoprompt Reports, Embedded BI Applications, or any procedure that includes the FIND parameter.

Computer Aided Instruction, Program Testing, Auto Prompt, Desktop Application, Microprompt Strategy, Assignment Free Microlectures, MIL Design Process.

When generating samples from an LM by iteratively sampling the next token, we do not have much control over attributes of the output text, such as the topic, the style, and the sentiment. Many applications demand good control over the model output. For example, if we plan to use an LM to generate reading materials for kids, we would like to guide the output stories to be safe, educational and easily understood by children.

How to steer a powerful unconditioned language model? In this post, we will delve into several approaches for controlled content generation with an unconditioned language model. Note that model steerability is still an open research question. Each introduced method has certain pros & cons. The approaches are: apply guided decoding strategies and select desired outputs at test time; optimize for the most desired outcomes via good prompt design; and fine-tune the base model or steerable layers to do conditioned content generation.
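Iterative next-token sampling and guided decoding at test time, both mentioned above, can be illustrated with a small sketch. Everything here is an assumption made for illustration: `TOY_LM` is a hypothetical hand-written bigram table standing in for a real language model, and the two steering functions (a per-token log-space `bonus` and a `best_of_n` reranker) are generic examples rather than any specific published method.

```python
import math
import random

# Hypothetical toy bigram "language model": next-token probabilities given the previous token.
TOY_LM = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "storm": 0.5},
    "a": {"cat": 0.5, "storm": 0.5},
    "cat": {"sleeps": 0.7, "hisses": 0.3},
    "storm": {"rages": 0.7, "passes": 0.3},
    "sleeps": {"</s>": 1.0},
    "hisses": {"</s>": 1.0},
    "rages": {"</s>": 1.0},
    "passes": {"</s>": 1.0},
}


def sample_next(prev, rng, bonus=None):
    # Guided decoding: add a log-space bonus to preferred tokens before sampling.
    bonus = bonus or {}
    dist = TOY_LM[prev]
    tokens = list(dist)
    weights = [p * math.exp(bonus.get(tok, 0.0)) for tok, p in dist.items()]
    return rng.choices(tokens, weights=weights, k=1)[0]


def generate(rng, bonus=None, max_len=10):
    # Iteratively sample the next token until the end marker or the length limit.
    out, prev = [], "<s>"
    for _ in range(max_len):
        tok = sample_next(prev, rng, bonus)
        if tok == "</s>":
            break
        out.append(tok)
        prev = tok
    return out


def best_of_n(rng, n, scorer):
    # Select a desired output at test time: sample n candidates, keep the top-scoring one.
    samples = [generate(rng) for _ in range(n)]
    return max(samples, key=scorer)
```

For example, `generate(random.Random(0), bonus={"cat": 50.0, "sleeps": 50.0})` pushes the sample strongly toward the calm continuation, while `best_of_n` leaves sampling untouched and filters afterwards. The first changes the distribution at every step; the second costs n full generations but needs no access to the model's token probabilities.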
There is a gigantic amount of free text on the Web, several orders of magnitude more than labelled benchmark datasets. State-of-the-art language models (LMs) are trained on unsupervised Web data at large scale.

We found 4 dictionaries with English definitions that include the word auto prompt.

The autoprompt templates behave as follows. One displays the parameters vertically at the left side of the page. One is the same as autoprompttop, but with the Run in a new window check box preselected. One displays the parameters horizontally at the top of the page and is the default template value.
